About six months ago, I gave a presentation on how to conduct reliable benchmarking in Go. Recently, I submitted issue #41641 to the Go project. It describes a subtle problem that you might also need to address in some cases.

The issue is all about the following code snippet:

|

|

On my target machine (CPU Quad-core Intel Core i7-7700 (-MT-MCP-) speed/max 1341/4200 MHz Kernel 5.4.0-42-generic x86_64), running the snippet with the following command:

|

|

The result shows:

|

|

Is this interesting to you? As you can tell, the measurement without the StopTimer/StartTimer pair is 26ns faster than the one with the StopTimer/StartTimer pair. How does this happen?

To understand the reason behind it, let’s modify the benchmark a little bit:

|

|

This time, we use a loop over the variable k to increase the number of atomic operations in the bench loop, that is:

|

|

Thus, with a higher k, the target code becomes more costly. Using a similar command:

|

|

One can produce a similar result, as follows:

|

|

What’s interesting in the modified benchmark result is that, as the target code becomes more costly, the gap between with-timer and w/o-timer narrows. More specifically, in the last pair of outputs, when n=100000, the measured time per atomic operation differs by only (444µs-432µs)/100000 = 0.12 ns, which is quite accurate compared with the error of (34.8ns-6.44ns)/1 = 28.36 ns when n=1.

Why? There are two ways to trace the problem down to the bare bones.

Initial Investigation Using go tool pprof

As a standard procedure, let’s benchmark the code that interrupts the timer and analyze the profiling result using go tool pprof:

|

|

|

|

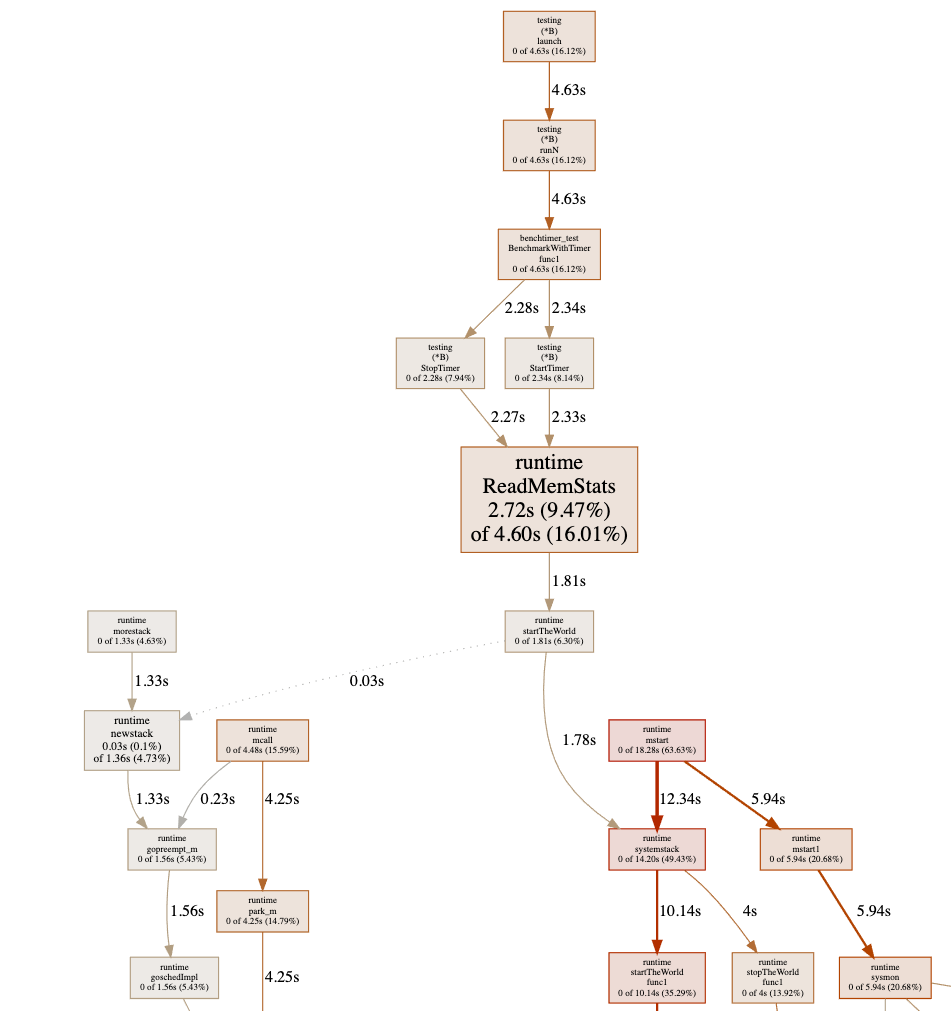

Sadly, the graph shows a chunk of useless information where most of

the costs shows as runtime.ReadMemStats:

This is because of the StopTimer/StartTimer implementation in

the testing package calls runtime.ReadMemStats:

|

|

As we know, runtime.ReadMemStats stops the world, and each call to it is very time-consuming. This is yet another known issue, #20875, which is about reducing the runtime.ReadMemStats overhead in benchmarking.
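To get a feeling for how expensive a single call is, here is a minimal sketch that times runtime.ReadMemStats directly. The helper name avgReadMemStats and the iteration count are only illustrative assumptions, and the number it prints will vary with the machine and the Go version.

```go
package main

import (
	"fmt"
	"runtime"
	"time"
)

// avgReadMemStats (a hypothetical helper) returns the average wall-clock
// time of one runtime.ReadMemStats call, measured over n calls.
func avgReadMemStats(n int) time.Duration {
	var m runtime.MemStats
	start := time.Now()
	for i := 0; i < n; i++ {
		runtime.ReadMemStats(&m)
	}
	// Only two clock reads surround the whole loop, so their overhead
	// is spread over all n calls.
	return time.Since(start) / time.Duration(n)
}

func main() {
	fmt.Printf("runtime.ReadMemStats: %v per call\n", avgReadMemStats(1000))
}
```

If the reported cost per call is far above the few nanoseconds of an atomic operation, it is easy to see why the calls triggered by each StopTimer/StartTimer pair dominate the profile.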

Since we do not care about memory allocation at the moment, one could avoid this issue by simply hacking the source code and commenting out the call to runtime.ReadMemStats:

|

|

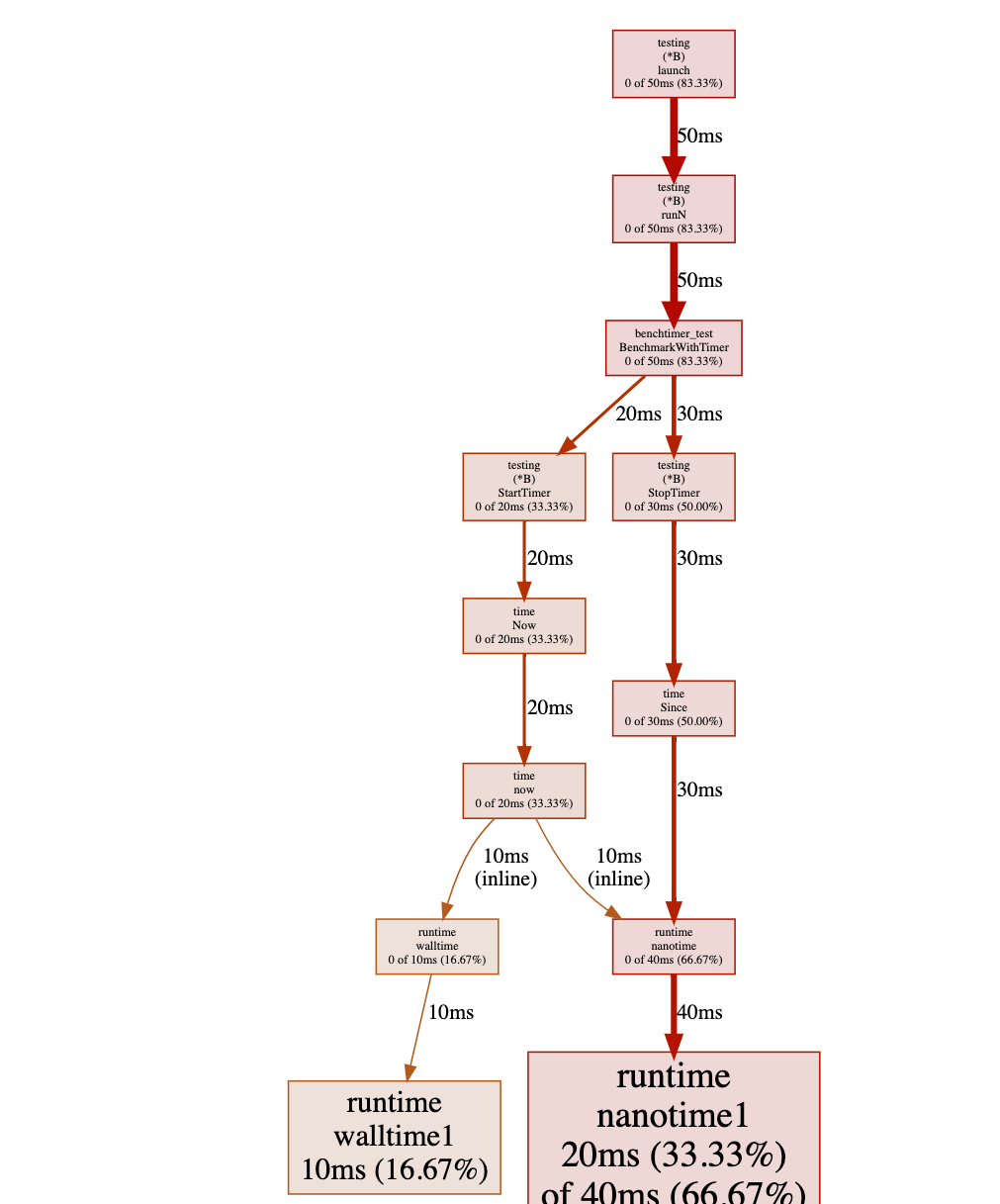

If we re-run the test again, the pprof shows us:

Have you noticed where the problem is? Obviously, there is a heavy cost

while calling time.Now() in a tight loop

(not really surprising because it is a system call).

Further Verification Using C++

As we discussed in the previous section, Go’s pprof facility has its own problems when profiling a benchmark, and the only way to verify the source of the measurement error was to edit the source code of Go. You might want to ask: can we do something better than that? The answer is yes.

Let’s write the initial benchmark in C++. This time, we go straight to the issue of calling now():

|

|

Compile it with:

|

|

In this code snippet, we are trying to measure the performance of an empty function. Ideally, the output should be 0ns. However, there is still a cost in calling the empty function:

|

|

Furthermore, we could also simplify the code to the subtraction of two now() calls:

|

|

and you will see that the output still reports avg since: 16ns. This proves that calling now() itself adds an overhead to the benchmark.

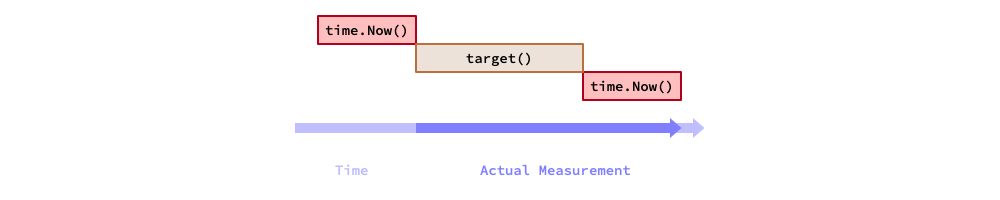

Thus, in terms of benchmarking, the actual measured time of a target code equals

to the execution time of the target code plus the overhead of calling now(),

as showed in the figure below.

Mathematically speaking, assume the target code takes T ns and the overhead of now() is t ns. Now, let’s run the target code N times. The total measured time is T*N+t, so the average time of a single iteration of the target code is T+t/N. Thus, the systematic measurement error is t/N. Therefore, with a higher N, the systematic measurement error gets smaller.
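The model above can be sketched in a few lines of Go. The function names measuredAverage and systematicError are hypothetical, and the values 6ns for the target code and 16ns for the timer overhead are only illustrative inputs, not new measurements.

```go
package main

import "fmt"

// measuredAverage models the per-iteration time a benchmark reports when
// the target code takes target ns, the timer overhead is overhead ns, and
// the target runs n times between one pair of clock reads: (T*N + t) / N.
func measuredAverage(target, overhead float64, n int) float64 {
	return (target*float64(n) + overhead) / float64(n)
}

// systematicError is the share of the reported average that is caused by
// the timer itself: t / N.
func systematicError(overhead float64, n int) float64 {
	return overhead / float64(n)
}

func main() {
	// Illustrative values in nanoseconds (assumptions, not measurements).
	const target, overhead = 6.0, 16.0
	for n := 1; n <= 100000; n *= 10 {
		fmt.Printf("N=%-6d measured=%.5f ns  error=%.5f ns\n",
			n, measuredAverage(target, overhead, n), systematicError(overhead, n))
	}
}
```

With N=1 the whole overhead lands on the single iteration, while each tenfold increase of N divides the error by ten. This matches the trend in the benchmark results earlier.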

The Solution

Back to the original question: how can we avoid the measurement error? A quick and dirty solution is to subtract the overhead of now():

|

|

And in Go, you could do something like this:

|

|

As a take-away message: if you are writing a micro-benchmark (one that runs in nanoseconds), and you must interrupt the timer to clean up and reset some resources for some reason, then you must calibrate the measurement.

If Go’s benchmark facility addresses the issue internally, then great; but if it does not, at least you are now aware of this issue and know how to fix it.
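For readers who want to try such a calibration outside of go test, the following is a rough, self-contained sketch and not a definitive implementation. The helpers timerOverhead and calibrate are hypothetical names. The sketch assumes that the cost of one time.Now/time.Since pair is a reasonable stand-in for the per-iteration timer overhead, and it fixes the iteration count through the test.benchtime flag so that the run stays short.

```go
package main

import (
	"flag"
	"fmt"
	"sync/atomic"
	"testing"
	"time"
)

// timerOverhead (hypothetical helper) estimates the average cost of one
// time.Now/time.Since pair by calling them back to back n times.
func timerOverhead(n int) time.Duration {
	var total time.Duration
	for i := 0; i < n; i++ {
		start := time.Now()
		total += time.Since(start)
	}
	return total / time.Duration(n)
}

// calibrate (hypothetical helper) subtracts the estimated timer overhead
// from a measured per-iteration duration. The result is clamped at zero,
// because a negative duration would make no sense for the target code.
func calibrate(perOp, overhead time.Duration) time.Duration {
	if perOp < overhead {
		return 0
	}
	return perOp - overhead
}

func main() {
	// testing.Init registers the testing flags when not running under go test.
	testing.Init()
	// Assumption: a fixed iteration count keeps the run short, since every
	// StopTimer/StartTimer pair is expensive in wall-clock time.
	if err := flag.Set("test.benchtime", "1000x"); err != nil {
		panic(err)
	}

	var v int32
	r := testing.Benchmark(func(b *testing.B) {
		for i := 0; i < b.N; i++ {
			b.StopTimer()
			// ... clean up and reset resources here ...
			b.StartTimer()
			atomic.AddInt32(&v, 1)
		}
	})

	perOp := r.T / time.Duration(r.N)
	overhead := timerOverhead(r.N)
	fmt.Printf("raw: %v/op, timer overhead: %v, calibrated: %v/op\n",
		perOp, overhead, calibrate(perOp, overhead))
}
```

The calibrated value is only an estimate. The overhead is measured separately from the benchmark, so noise in either measurement carries over into the result.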

Further Reading Suggestions

- Changkun Ou. Conduct Reliable Benchmarking in Go. March 26, 2020. https://golang.design/s/gobench

- Changkun Ou. testing: inconsistent benchmark measurements when interrupts timer. Sep 26, 2020. https://golang.org/issue/41641

- Josh Bleecher Snyder. testing: consider calling ReadMemStats less during benchmarking. Jul 1, 2017. https://golang.org/issue/20875

- Beyer, D., Löwe, S. & Wendler, P. Reliable benchmarking: requirements and solutions. Int J Softw Tools Technol Transfer 21, 1–29 (2019). https://doi.org/10.1007/s10009-017-0469-y